Tock OS Book

Getting Started

The book includes a quick start guide.

Tock Workshop Courses

For a more in-depth walkthough-style less, look here.

Development Guides

The book also has walkthoughs on how to implement different features in Tock OS.

Getting Started

This getting started guide covers how to get started using Tock.

Hardware

To really be able to use Tock and get a feel for the operating system, you will need a hardware platform that tock supports. The TockOS Hardware includes a list of supported hardware boards. You can also view the boards folder to see what platforms are supported.

As of February 2021, this getting started guide is based around five hardware

platforms. Steps for each of these platforms are explicitly described here.

Other platforms will work for Tock, but you may need to reference the README

files in tock/boards/ for specific setup information. The five boards are:

- Hail

- imix

- nRF52840dk (PCA10056)

- Arduino Nano 33 BLE (regular or Sense version)

- BBC Micro:bit v2

These boards are reasonably well supported, but note that others in Tock may have some "quirks" around what is implemented (or not), and exactly how to load code and test that it is working. This guides tries to be general, and Tock generally tries to follow a certain convention, but the project is under active development and new boards are added rapidly. You should definitely consult the board-specific README to see if there are any board-specific details you should be aware of.

When you are ready to use your board, see the hardware setup guide for information on any needed setup to get the board working with your machine.

Software

Tock, like many computing systems, is split between a kernel and userspace apps. These are developed, compiled, and loaded separately.

First, complete the quickstart guide to get all of the necessary tools installed.

The kernel is available in the Tock repository. See here for information on getting started.

Userspace apps are compiled and loaded separately from the kernel. You can install one or more apps without having to update or re-flash the kernel. See here for information on getting started.

Quickstart

Get started with Tock quickly! The general requirements are:

- Rustup

- Tockloader

- GCC toolchains for ARM and RISC-V

- Code loading tool

Choose the guide for your platform:

Quickstart: Mac

This guide assumes you have the Homebrew package manager installed.

Install the following:

-

Command line utilities.

$ brew install wget pipx git coreutils -

Clone the Tock kernel repository.

$ git clone https://github.com/tock/tock -

rustup. This tool helps manage installations of the Rust compiler and related tools.

$ curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh -

arm-none-eabi toolchain and riscv64-unknown-elf toolchains. This enables you to compile apps written in C.

$ brew install arm-none-eabi-gcc riscv64-elf-gcc -

tockloader. This is an all-in-one tool for programming boards and using Tock.

$ pipx install tockloaderNote: You may need to add

tockloaderto your path. If you cannot run it after installation, run the following:$ pipx ensurepath -

JLinkExeto load code onto your board.JLinkis available from the Segger website. You want to install the "J-Link Software and Documentation Pack". There are various packages available depending on operating system. -

OpenOCD. Another tool to load code. You can install through package managers.

$ brew install open-ocd

Quickstart: Linux

Install the following:

-

Command line utilities.

$ sudo apt install git wget zip curl python3 python3-pip python3-venv -

Clone the Tock kernel repository.

$ git clone https://github.com/tock/tock -

rustup. This tool helps manage installations of the Rust compiler and related tools.

$ curl --proto '=https' --tlsv1.2 -sSf https://sh.rustup.rs | sh -

arm-none-eabi toolchain and riscv64-unknown-elf toolchains. This enables you to compile apps written in C.

$ sudo apt install gcc-arm-none-eabi gcc-riscv64-unknown-elf -

tockloader. This is an all-in-one tool for programming boards and using Tock.

$ pipx install tockloaderNote: You may need to add

tockloaderto your path. If you cannot run it after installation, run the following:$ pipx ensurepath -

JLinkExeto load code onto your board.JLinkis available from the Segger website. You want to install the "J-Link Software and Documentation Pack". There are various packages available depending on operating system. -

OpenOCD. Another tool to load code. You can install through package managers.

$ sudo apt install openocd

One-Time Fixups

On Linux, you might need to give your user access to the serial port used by the board. If you get permission errors or you cannot access the serial port, this is likely the issue.

You can fix this by setting up a udev rule to set the permissions correctly for the serial device when it is attached. You only need to run the command below for your specific board, but if you don't know which one to use, running both is totally fine, and will set things up in case you get a different hardware board!

$ sudo bash -c "echo 'ATTRS{idVendor}==\"0403\", ATTRS{idProduct}==\"6015\", MODE=\"0666\"' > /etc/udev/rules.d/99-ftdi.rules" $ sudo bash -c "echo 'ATTRS{idVendor}==\"2341\", ATTRS{idProduct}==\"005a\", MODE=\"0666\"' > /etc/udev/rules.d/98-arduino.rules"Afterwards, detach and re-attach the board to reload the rule.

Quickstart: Windows

Note: This is a work in progress. Any contributions are welcome!

We use WSL on Windows for Tock.

Install Tools

Configure WSL To Use USB

On Windows Subsystem for Linux (WSL)

Programming JLink devices with Tock in WSL:

Trying to program an nRF52840DK with WSL can be a little tricky because WSL abstracts away low level access for USB devices. WSL1 does not offer access to physical hardware, just an environment to use linux on microsoft. WSL2 on the other hand is unable to find JLink devices even if you have JLink installed because of the USB abstraction. To get around this limitation, we use USBIP - a tool that connects the USB device over a TCP tunnel.

This guide might apply for any device programmed via JLink.

Steps to connect to nRF52840DK with WSL:

-

Get Ubuntu 22.04 from Microsoft store. Install it as a WSL distro with

wsl --install -d Ubuntu-22.04using Windows Powershell or Cmd prompt with admin privileges. -

Once Ubuntu 22.04 is installed, the Ubuntu 20.04 distro that ships as default with WSL must be uninstalled. Set the 22.04 distro as the WSL default by with the

wsl --setdefault Ubuntu-22.04command. -

Install JLink's linux package from their website on your WSL linux distro. You may need to modify jlink rules to allow JLink to access the nRF52840DK. This can be done with

sudo nano /etc/udev/rules.d/99-jlink.rulesand addingSUBSYSTEM=="tty", ATTRS{idVendor}=="1051", MODE="0666", GROUP="dialout"to the file. -

Next, the udev rules have to be reloaded and triggered with

sudo udevadm control --reload-rules && udevadm trigger. Doing this should apply the new rules. -

On the windows platform, make sure WSL is set to version 2. Check the WSL version with

wsl -l -v. If it is version 1, change it to WSL2 withwsl --set-version Ubuntu-22.04 2(USBIP works with WSL2). -

Install USBIP from here. Version 4.x onwards removes USBIP tooling requirement from the client side, so you don't have to install anything on the linux subsystem.

-

On windows, open powershell/cmd in admin mode and run

usbipd wsl list. That should give you the list of devices. Note the Bus ID of your J-Link device. -

For the first time that you want to attach your device, you need to bind the bus between the host OS and the WSL using

usbipd bind -b <bus-id>. -

Once bound, you can attach your device to WSL by running

usbipd attach --wsl -b <busid>on powershell/cmd (When attaching a device for the first time, it has to be done with admin privileges). -

To check if the attach worked, run

lsusbon WSL. If it worked, the device should be listed asSEGGER JLink. -

The kernel can now be flashed with

make installand other tockloader commands should work.

Note:

- A machine with an x64 processor is required. (x86 and Arm64 are currently not supported with USBIP).

- Make sure your firewall is not blocking port 3240 as USBIP uses that port to interface windows and WSL. (Windows defender is usually the culprit if you don't have a third party firewall).

- Add an inbound rule to Windows defender/ your third party firewall allowing USBIP to use port 3240 if you see a port blocked error.

One-Time Fixups

The serial device parameters stored in the FTDI chip do not seem to get passed to Ubuntu. Plus, WSL enumerates every possible serial device. Therefore, tockloader cannot automatically guess which serial port is the correct one, and there are a lot to choose from.

You will need to open Device Manager on Windows, and find which

COMport the tock board is using. It will likely be called "USB Serial Port" and be listed as an FTDI device. The COM number will match what is used in WSL. For example,COM9is/dev/ttyS9on WSL.To use tockloader you should be able to specify the port manually. For example:

tockloader --port /dev/ttyS9 list.

Getting the Hardware Connected and Setup

Plug your hardware board into your computer. Generally this requires a micro USB cable, but your board may be different.

Note! Some boards have multiple USB ports.

Some boards have two USB ports, where one is generally for debugging, and the other allows the board to act as any USB peripheral. You will want to connect using the "debug" port.

Some example boards:

- imix: Use the port labeled

DEBUG.- nRF52 development boards: Use the port on the skinny side of the board (do NOT use the port labeled "nRF USB").

The board should appear as a regular serial device (e.g.

/dev/tty.usbserial-c098e5130006 on my Mac or /dev/ttyUSB0 on my Linux box).

On Linux, this may require some setup, see the "one-time fixups" box on the

quickstart page for your platform (Linux or

Windows].

One Time Board Setup

If you have a Hail, imix, or nRF52840dk please skip to the next section.

If you have an Arduino Nano 33 BLE (sense or regular), you need to update the bootloader on the board to the Tock bootloader. Please follow the bootloader update instructions.

If you have a Micro:bit v2 then you need to load the Tock bootloader. Please follow the bootloader installation instructions.

Test The Board

With the board connected, you should be able to use tockloader to interact with

the board. For example, to retrieve serial UART data from the board, run

tockloader listen, and you should see something like:

$ tockloader listen

No device name specified. Using default "tock"

Using "/dev/ttyUSB0 - Imix - TockOS"

Listening for serial output.

Initialization complete. Entering main loop

You may also need to reset (by pressing the reset button on the board) the board to see the message. You may also not see any output if the Tock kernel has not been flashed yet.

In case you have multiple serial devices attached to your computer, you may need to select the appropriate J-Link device:

$ tockloader listen

[INFO ] No device name specified. Using default name "tock".

[INFO ] No serial port with device name "tock" found.

[INFO ] Found 2 serial ports.

Multiple serial port options found. Which would you like to use?

[0] /dev/ttyACM1 - J-Link - CDC

[1] /dev/ttyACM0 - L830-EB - Fibocom L830-EB

Which option? [0] 0

[INFO ] Using "/dev/ttyACM1 - J-Link - CDC".

[INFO ] Listening for serial output.

Initialization complete. Entering main loop

NRF52 HW INFO: Variant: AAC0, Part: N52840, Package: QI, Ram: K256, Flash: K1024

tock$

In case you don't see any text printed after "Listening for serial output", try

hitting [ENTER] a few times. You should be greeted with a tock$ shell

prompt. You can use the reset command to restart your nRF chip and see the

above greeting.

In case you want to use a different serial console monitor, you may need to identify the serial console device created for your board. On Linux, you can identify the J-Link debugger's serial port by running:

$ dmesg -Hw | grep tty

< ... some output ... >

< plug in the nRF52840DKs front USB (not "nRF USB") >

[ +0.003233] cdc_acm 1-3:1.0: ttyACM1: USB ACM device

In this case, the serial console can be accessed as /dev/ttyACM1.

You can also see if any applications are installed with tockloader list:

$ tockloader list

[INFO ] No device name specified. Using default name "tock".

[INFO ] Using "/dev/cu.usbmodem14101 - Nano 33 BLE - TockOS".

[INFO ] Paused an active tockloader listen in another session.

[INFO ] Waiting for the bootloader to start

[INFO ] No found apps.

[INFO ] Finished in 2.928 seconds

[INFO ] Resumed other tockloader listen session

If these commands fail you may not have installed Tockloader, or you may need to update to a later version of Tockloader. There may be other issues as well, and you can ask on Slack if you need help.

Testing You Can Compile the Kernel

To test if your environment is working enough to compile Tock, go to the

tock/boards/ directory and then to the board folder for the hardware you have

(e.g. tock/boards/imix for imix). Then run make in that directory. This

should compile the kernel. It may need to compile several supporting libraries

first (so may take 30 seconds or so the first time). You should see output like

this:

$ cd tock/boards/imix

$ make

Compiling tock-cells v0.1.0 (/Users/bradjc/git/tock/libraries/tock-cells)

Compiling tock-registers v0.5.0 (/Users/bradjc/git/tock/libraries/tock-register-interface)

Compiling enum_primitive v0.1.0 (/Users/bradjc/git/tock/libraries/enum_primitive)

Compiling tock-rt0 v0.1.0 (/Users/bradjc/git/tock/libraries/tock-rt0)

Compiling imix v0.1.0 (/Users/bradjc/git/tock/boards/imix)

Compiling kernel v0.1.0 (/Users/bradjc/git/tock/kernel)

Compiling cortexm v0.1.0 (/Users/bradjc/git/tock/arch/cortex-m)

Compiling capsules v0.1.0 (/Users/bradjc/git/tock/capsules)

Compiling cortexm4 v0.1.0 (/Users/bradjc/git/tock/arch/cortex-m4)

Compiling sam4l v0.1.0 (/Users/bradjc/git/tock/chips/sam4l)

Compiling components v0.1.0 (/Users/bradjc/git/tock/boards/components)

Finished release [optimized + debuginfo] target(s) in 28.67s

text data bss dec hex filename

165376 3272 54072 222720 36600 /Users/bradjc/git/tock/target/thumbv7em-none-eabi/release/imix

Compiling typenum v1.11.2

Compiling byteorder v1.3.4

Compiling byte-tools v0.3.1

Compiling fake-simd v0.1.2

Compiling opaque-debug v0.2.3

Compiling block-padding v0.1.5

Compiling generic-array v0.12.3

Compiling block-buffer v0.7.3

Compiling digest v0.8.1

Compiling sha2 v0.8.1

Compiling sha256sum v0.1.0 (/Users/bradjc/git/tock/tools/sha256sum)

6fa1b0d8e224e775d08e8b58c6c521c7b51fb0332b0ab5031fdec2bd612c907f /Users/bradjc/git/tock/target/thumbv7em-none-eabi/release/imix.bin

You can check that tockloader is installed by running:

$ tockloader --help

If either of these steps fail, please double check that you followed the environment setup instructions above.

Flash the kernel

Now that the board is connected and you have verified that the kernel compiles (from the steps above), we can flash the board with the latest Tock kernel:

$ cd boards/<your board>

$ make

Boards provide the target make install as the recommended way to load the

kernel.

$ make install

You can also look at the board's README for more details.

Installing Tock Applications

We have the kernel flashed, but the kernel doesn't actually do anything. Applications do! To load applications, we are going to use tockloader.

Loading Pre-built Applications

We're going to install some pre-built applications, but first, let's make sure we're in a clean state, in case your board already has some applications installed. This command removes any processes that may have already been installed.

$ tockloader erase-apps

Now, let's install two pre-compiled example apps. Remember, you may need to specify which board you are using and how to communicate with it for all of these commands. If you are using Hail or imix you will not have to.

$ tockloader install https://www.tockos.org/assets/tabs/blink.tab

The

installsubcommand takes a path or URL to an TAB (Tock Application Binary) file to install.

The board should restart and the user LED should start blinking. Let's also install a simple "Hello World" application:

$ tockloader install https://www.tockos.org/assets/tabs/c_hello.tab

If you now run tockloader listen you should be able to see the output of the

Hello World! application. You may need to manually reset the board for this to

happen.

$ tockloader listen

[INFO ] No device name specified. Using default name "tock".

[INFO ] Using "/dev/cu.usbserial-c098e513000a - Hail IoT Module - TockOS".

[INFO ] Listening for serial output.

Initialization complete. Entering main loop.

Hello World!

␀

Uninstalling and Installing More Apps

Lets check what's on the board right now:

$ tockloader list

...

┌──────────────────────────────────────────────────┐

│ App 0 |

└──────────────────────────────────────────────────┘

Name: blink

Enabled: True

Sticky: False

Total Size in Flash: 2048 bytes

┌──────────────────────────────────────────────────┐

│ App 1 |

└──────────────────────────────────────────────────┘

Name: c_hello

Enabled: True

Sticky: False

Total Size in Flash: 1024 bytes

[INFO ] Finished in 2.939 seconds

As you can see, the apps are still installed on the board. We can remove apps with the following command:

$ tockloader uninstall

Following the prompt, if you remove the blink app, the LED will stop blinking,

however the console will still print Hello World.

Now let's try adding a more interesting app:

$ tockloader install https://www.tockos.org/assets/tabs/sensors.tab

The sensors app will automatically discover all available sensors, sample them

once a second, and print the results.

$ tockloader listen

[INFO ] No device name specified. Using default name "tock".

[INFO ] Using "/dev/cu.usbserial-c098e513000a - Hail IoT Module - TockOS".

[INFO ] Listening for serial output.

Initialization complete. Entering main loop.

[Sensors] Starting Sensors App.

Hello World!

␀[Sensors] All available sensors on the platform will be sampled.

ISL29035: Light Intensity: 218

Temperature: 28 deg C

Humidity: 42%

FXOS8700CQ: X: -112

FXOS8700CQ: Y: 23

FXOS8700CQ: Z: 987

Compiling and Loading Applications

There are many more example applications in the libtock-c repository that you

can use. Let's try installing the ROT13 cipher pair. These two applications use

inter-process communication (IPC) to implement a

ROT13 cipher.

Start by uninstalling any applications:

$ tockloader uninstall

Get the libtock-c repository:

$ git clone https://github.com/tock/libtock-c

Build the rot13_client application and install it:

$ cd libtock-c/examples/rot13_client

$ make

$ tockloader install

Then make and install the rot13_service application:

$ cd ../rot13_service

$ tockloader install --make

Then you should be able to see the output:

$ tockloader listen

[INFO ] No device name specified. Using default name "tock".

[INFO ] Using "/dev/cu.usbserial-c098e5130152 - Hail IoT Module - TockOS".

[INFO ] Listening for serial output.

Initialization complete. Entering main loop.

12: Uryyb Jbeyq!

12: Hello World!

12: Uryyb Jbeyq!

12: Hello World!

12: Uryyb Jbeyq!

12: Hello World!

12: Uryyb Jbeyq!

12: Hello World!

Note: Tock platforms are limited in the number of apps they can load and run. However, it is possible to install more apps than this limit, since tockloader is (currently) unaware of this limitation and will allow to you to load additional apps. However the kernel will only load the first apps until the limit is reached.

Note about Identifying Boards

Tockloader tries to automatically identify which board is attached to make this process simple. This means for many boards (particularly the ones listed at the top of this guide) tockloader should "just work".

However, for some boards tockloader does not have a good way to identify which

board is attached, and requires that you manually specify which board you are

trying to program. This can be done with the --board argument. For example, if

you have an nrf52dk or nrf52840dk, you would run Tockloader like:

$ tockloader <command> --board nrf52dk --jlink

The --jlink flag tells tockloader to use the JLink JTAG tool to communicate

with the board (this mirrors using make flash above). Some boards support

OpenOCD, in which case you would pass --openocd instead.

To see a list of boards that tockloader supports, you can run

tockloader list-known-boards. If you have an imix or Hail board, you should

not need to specify the board.

Note, a board listed in

tockloader list-known-boardsmeans there are default settings hardcoded into tockloader's source on how to support those boards. However, all of those settings can be passed in via command-line parameters for boards that tockloader does not know about. Seetockloader --helpfor more information.

Familiarize Yourself with tockloader Commands

The tockloader tool is a useful and versatile tool for managing and installing

applications on Tock. It supports a number of commands, and a more complete list

can be found in the tockloader repository, located at

github.com/tock/tockloader. Below is

a list of the more useful and important commands for programming and querying a

board.

tockloader install

This is the main tockloader command, used to load Tock applications onto a

board. By default, tockloader install adds the new application, but does not

erase any others, replacing any already existing application with the same name.

Use the --no-replace flag to install multiple copies of the same app. To

install an app, either specify the tab file as an argument, or navigate to the

app's source directory, build it (probably using make), then issue the install

command:

$ tockloader install

Tip: You can add the

--makeflag to have tockloader automatically run make before installing, i.e.tockloader install --make

Tip: You can add the

--eraseflag to have tockloader automatically remove other applications when installing a new one.

tockloader uninstall [application name(s)]

Removes one or more applications from the board by name.

tockloader erase-apps

Removes all applications from the board.

tockloader list

Prints basic information about the apps currently loaded onto the board.

tockloader info

Shows all properties of the board, including information about currently loaded applications, their sizes and versions, and any set attributes.

tockloader listen

This command prints output from Tock apps to the terminal. It listens via UART, and will print out anything written to stdout/stderr from a board.

Tip: As a long-running command,

listeninteracts with other tockloader sessions. You can leave a terminal window open and listening. If another tockloader process needs access to the board (e.g. to install an app update), tockloader will automatically pause and resume listening.

tockloader flash

Loads binaries onto hardware platforms that are running a compatible bootloader.

This is used by the Tock Make system when kernel binaries are programmed to the

board with make program.

Tock Course

The Tock course includes several different modules that guide you through various aspects of Tock and Tock applications. Each module is designed to be fairly standalone such that a full course can be composed of different modules depending on the interests and backgrounds of those doing the course. You should be able to do the lessons that are of interest to you.

Each module begins with a description of the lesson, and then includes steps to follow. The modules cover both programming in the kernel as well as applications.

Setup and Preparation

You should follow the getting started guide to get your development setup and ensure you can communicate with the hardware.

Which Tutorial Should You Follow?

All tutorials explore Tock and running applications, but they focus on different subsystems and different application areas for Tock. If you are interested in learning about a specific feature or subsystem this table tries to help direct you to the most relevant tutorial.

| Aspect of Tock | Tutorial |

|---|---|

| Applications | |

| Hardware Root-of-Trust | Root of Trust |

| Fido Security Key | USB Security Key |

| Wireless Networking | Thread Networking |

| Topics | |

| Process Isolation | Root of Trust |

| Inter-process Communication | Thread Networking, Dynamic Apps |

| Kernel Configuration | Dynamic Apps |

| Skills | |

| Writing Apps | USB Security Key, Sensor |

| Implementing a Capsule | USB Security Key |

Tock as a Hardware Root of Trust (HWRoT) Operating Sytsem

This module and submodules will walk you through setting up Tock as an operating system capable of providing the software backbone of a hardware root of trust, placing emphasis on why Tock is well-suited for this role.

Background

A hardware root of trust (HWRoT) is a security-hardened device that provides the foundation of trust for another computing system. Systems such as mobile phones, servers, and industrial control systems run code and access data that needs to be trusted, and if compromised, could have severe negative impacts. HWRoTs serve to ensure the trustworthiness of the code and data their system uses to prevent these outcomes.

HWRoTs can come in two flavors: discrete and integrated.

-

Discrete: a discrete HWRoT is an individually-packaged system on chip (SoC), and communicates over one or more external buses to provide functionality.

-

Integrated: an integrated HWRoT is integrated into a SoC, and communicates over one or more internal buses to provide functionality to the rest of the SoC it resides in.

Some notable examples of HWRoTs include:

- The general-purpose, open-source OpenTitan HWRoT which comes in the discrete Earl Grey design as well as the Darjeeling integrated design

- Apple's Secure Enclave, the integrated root of trust in iPhone mobile processors

- Google's Titan Security Module, the discrete root of trust in Google Pixel phones

- Hewlett Packard Enterprise's Silicon Root of Trust, the integrated root of trust in their out-of-band management chip

- Microsoft's Pluton Security Processor, the integrated root of trust integrated into many of its silicon collaborators' processors (Intel, AMD, etc.)

- Arm's TrustZone, the general-purpose integrated HWRoT provided in some Cortex-M and Cortex-A processors

- Infineon's SLE78 Secure Element, which is used in YubiKey 5 series USB security keys

In practice, hardware roots of trust are essential for providing support for all kinds of operations, including:

-

Application-level cryptography: While any processor can be used to perform cryptographic operations, doing so on a non-hardened processor (i.e. one that can't prevent physical attacks) can result in side-channel leaks or vulnerability to fault injection attacks, allowing attackers to uncover secrets by measuring execution time or power consumption of the chip, or by triggering incorrect execution paths, respectively. HWRoTs are specifically designed to prevent such issues.

-

Key management: Similarly, cryptographic keys stored in memory can be leaked using invasive attacks on a chip--a secure element can instead store the keys and use them without them ever being copied to memory. Smaller HWRoTs focused on providing hardware cryptographic operations and secure key storage are often called secure elements.

-

Secure boot: In many systems, it is critical to ensure that code the processor runs hasn't been tampered with by an attacker. Secure boot allows for this by having multiple boot stages, where each stage verifies a digital signature on the next to verify integrity. The bottom-most boot stage is immutable (usually a ROM image baked into the chip design itself). In security-critical systems, the first boot stage is the HWRoT's ROM, which then boots the rest of the RoT, and finally the main processor of the system.

-

Hardware attestation: Often with internet-connected devices, it's important for a server to be able to verify that it's connected to a valid, uncompromised device before transferring data back-and-forth. During boot, each boot stage of a device with a HWRoT can generate and sign certificates attesting to the hash of the next boot stage's value. Each of these certificates establishes a conditional trust, demonstrating that a given stage is uncompromised, provided that all previous stages are also uncompromised. Together, these certificates form a trust ladder the server can review, verifying that the expected hash values were reported all the way back to the HWRoT ROM.

-

Device firmware updates (DFU): Device firmware updates can be a major threat vector to a device, as a vulnerability in the target device's ability to verify authenticity of an update can allow for an attacker to achieve remote code execution. By delegating device firmware updates to a HWRoT, which can verify the signature and update flash in a tamper-free way on its own, the process of DFU can be made significantly less risky.

-

Drive encryption: Similarly, drive encryption can be performed using a HWRoT to avoid attackers tampering with the drive encryption process and compromising the confidentiality of user data.

Hardware Notes

For accessibility, we will use a standard microcontroller in this demo rather than an actual hardware root of trust; that said, the principles in this demo apply readily to any HWRoT.

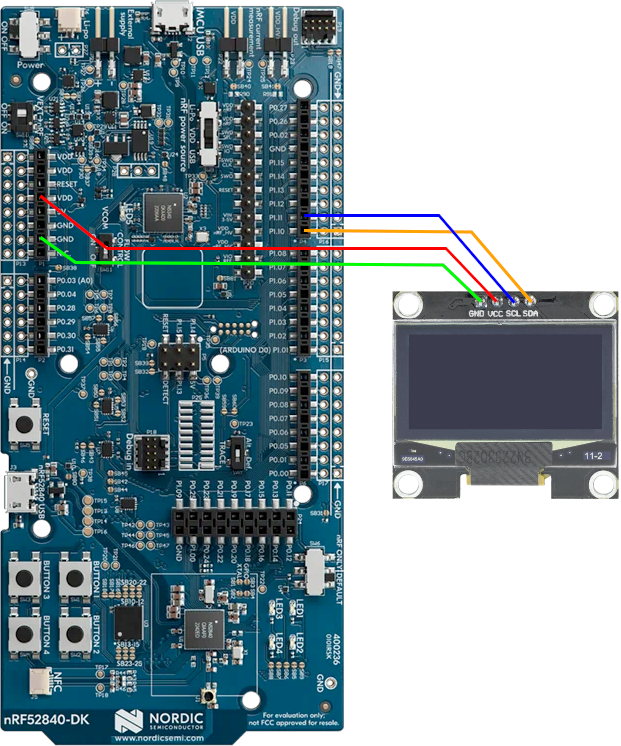

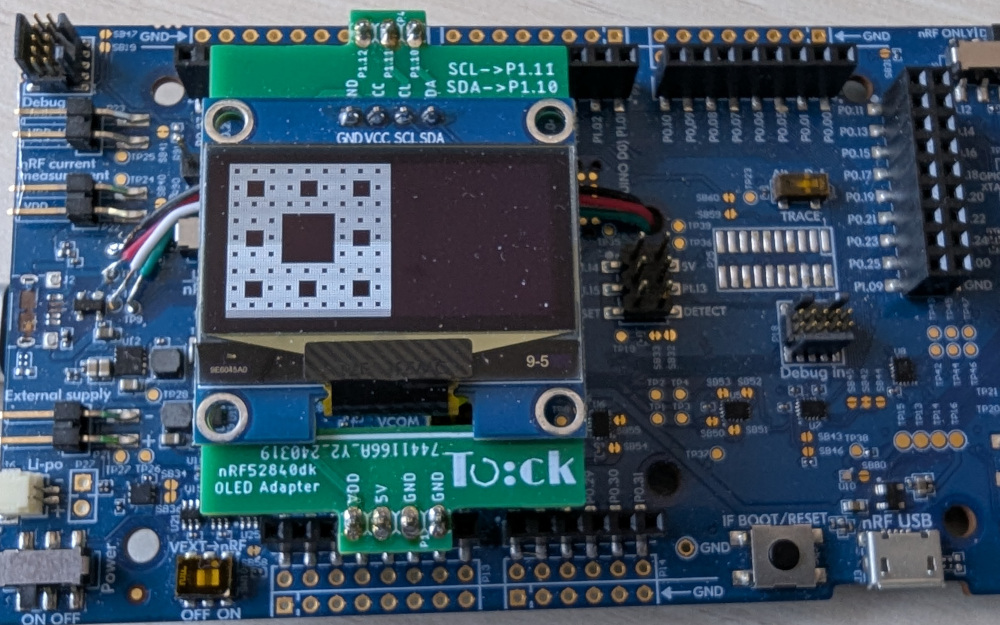

This guide was designed for the nRF52840dk with an attached screen. However, other hardware will work but will need some additional configuration to match the directions presented here.

Goal

Our goal is to build a simple encryption service, which we'll mount several attacks against, and demonstrate how Tock protects against them.

Along the way, we'll also cover foundational Tock concepts to give a top-level view of the OS as a whole.

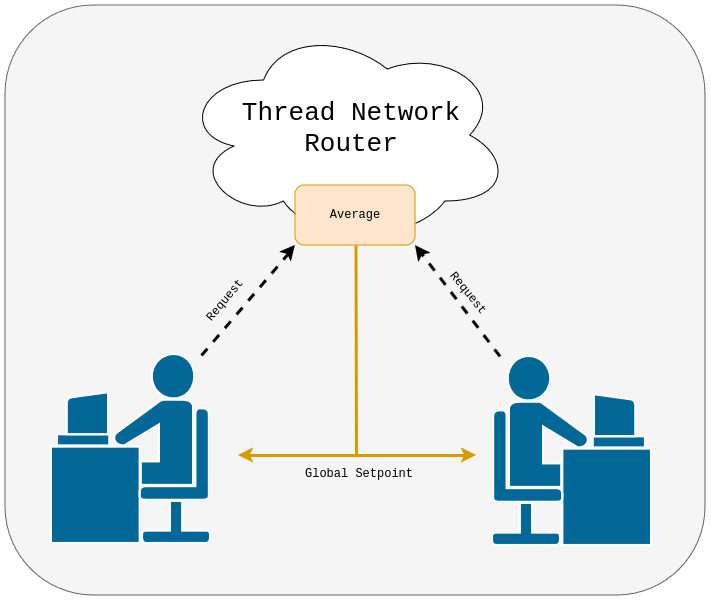

nRF52840dk Hardware Setup

Before beginning, check the following configurations on your nRF52840dk board.

- The

Powerswitch on the top left should be set toOn. - The

nRF power sourceswitch in the top middle of the board should be set toVDD. - The

nRF ONLY | DEFAULTswitch on the bottom right should be set toDEFAULT.

You should plug one USB cable into the top of the board for both programming and

communication, into the port labeled MCU USB on the short side of the board.

Kernel Setup

This tutorial requires a Tock kernel configured with two specific capsules instantiated that may not be included by default with a particular kernel:

The easiest way to get a kernel image with these installed is to use the Hardware Root of Trust tutorial configuration for the nRF52840dk.

cd tock/boards/tutorials/nrf52840dk-root-of-trust-tutorial

make install

However, you can also follow the guides to setup these capsules yourself in a different kernel setup or for a different board.

Organization and Getting Oriented to Tock

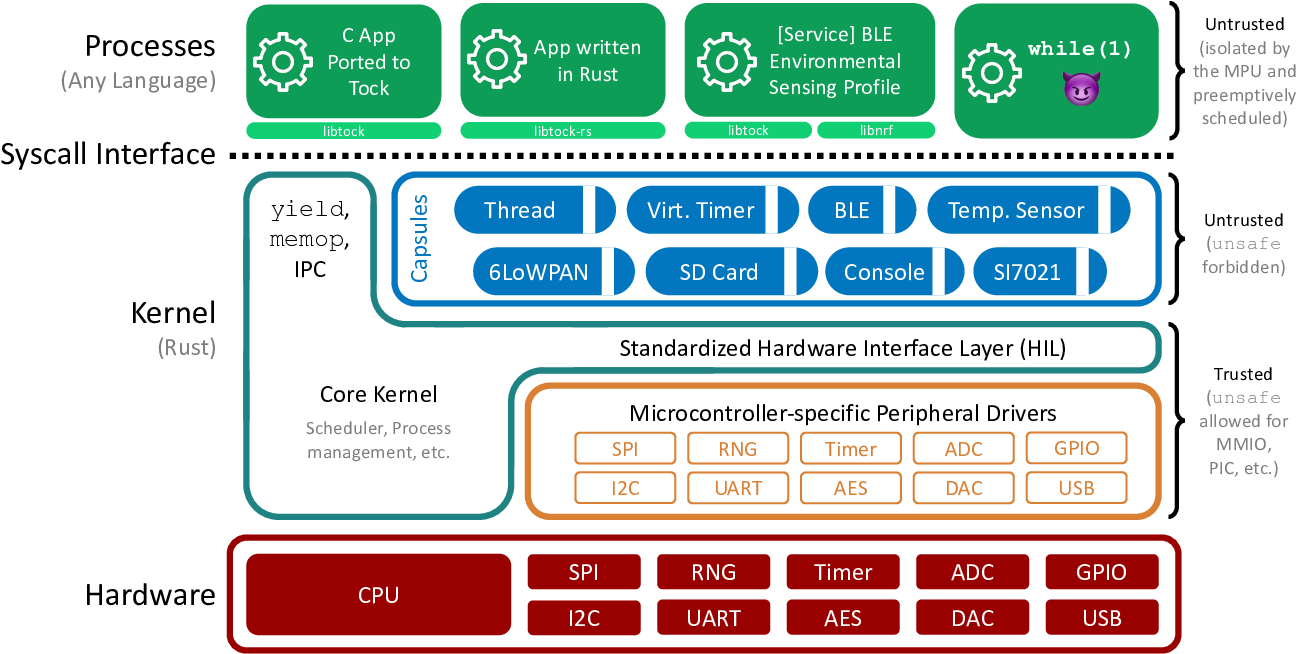

To get started, we briefly describe the general structure of Tock and will deep-dive into these components throughout the tutorial:

A Tock system contains primarily two components:

- The Tock kernel, which runs as the operating system on the board. This is compiled from the Tock repository.

- Userspace applications, which run as processes and are compiled and loaded separately from the kernel.

The Tock kernel is compiled specifically for a particular hardware device,

termed a board. Tock provides a set of reference board files under

/boards/<board name>. Any

time you need to compile the kernel or edit the board file, you will go to that

folder. You also install the kernel on the hardware board from that directory.

While the Tock kernel is written entirely in Rust, it supports userspace applications written in multiple languages. In particular, the Tock community maintains two userspace libraries for application development in C and Rust respectively:

libtock-cfor C applications ( tock/libtock-c )libtock-rsfor Rust applications ( tock/libtock-rs )

We will use libtock-c in this tutorial. Its example applications are located

in the /examples

directory of the libtock-c repository.

Stages

This module is broken into three stages:

- Creating a simple encryption service

- Preventing attacks at runtime with the MPU

- Preventing attacks at compile-time with Tock's isolation guarantees

Encryption Service Userspace Application

This submodule guides you through creating and using an encryption service. Providing an encryption service is a common feature of a HWRoT.

An encryption service can cryptographically encrypt and/or decrypt data on behalf of an application. Crucially, the service encapsulates the encryption key, preventing the application from ever having access to the key. This also prevents an attacker from being able to retrieve the key, enhancing the security of the encrypted data.

Once we setup the encryption service, we will use it throughout this course to demonstrate Tock's strengths as a hardware root of trust OS.

Background

Secure Elements as Roots of Trust

Recall from the overview that a secure element is a purpose-built chip used for key storage and encryption purposes, often in support of a main processor which needs to perform some kind of cryptography.

In a real-world setting, a secure element like the Infineon SLE78 (the chip used in the YubiKey 5 series) might communicate over a standard device-internal bus like SPI or I2C, or might even communicate directly with a host over USB.

Often, smaller secure elements like the SLE78 will receive commands and deliver responses encoded as application protocol data units (APDUs), a holdover from the smart card industry.

While we could replicate this behavior for our encryption service by passing APDUs back and forth over USB, we elide this complexity for the sake of simplicity and just prompt the user for plaintext to encrypt via Tock's console.

Hardware-backed Keys

In an actual hardware root of trust, the AES key in the encryption oracle would be hardware-backed, i.e. it would be generated and kept in a hardware key store apart from where the processor could directly access it.

While the nRF5x AES peripheral doesn't have support for hardware-backed keys, it does allow us to store our AES128 key in the encryption oracle driver and load it into the AES128 peripheral as needed; this is almost as secure, and in any case the difference is invisible to the userspace application which can't access the key either way.

Applications in Tock

For readers who have previously written embedded software, it's important to note that Tock applications are written in a manner much more similar to traditional, non-embedded software. They are compiled separately from the kernel and loaded separately onto the hardware. They can be started or stopped individually and can be removed from the hardware individually. Moreover, the kernel decides which applications to run and what permissions they should be given.

Applications make requests to the OS kernel through system calls. Applications

instruct the kernel using Command system calls, and the kernel notifies

applications with upcalls the application must subscribe to. Importantly,

upcalls never interrupt a running application. The application must yield to

receive upcalls (i.e. callbacks).

The userspace library (libtock) wraps system calls in easier to use functions.

The libtock library is completely asynchronous. Synchronous APIs to the system

calls are in libtock-sync. These functions include the call to yield and

expose a synchronous driver interface. Application code can use either.

Tock Allows and Upcalls

When interacting with drivers in Tock, it's important to note that by design, any driver can only access the data you explicitly allow it access to. In Tock, an allow is a buffer shared from a userspace application to a specified driver. These can be read-only, where the driver can only read what the app supplies in the buffer, or read-write, where the driver can also modify the buffer to e.g. write results.

In order to easily allow asynchronous driver interfaces, the Tock driver allows registering upcalls, callbacks which kernel drivers can invoke e.g. to signal to an app that a requested operation has completed.

Inter-process Communication (IPC) in Tock

Tock has an IPC driver in the kernel which allows userspace apps to advertise

IPC services with names such as org.tockos.tutorial.led_service.

Applications that want to make requests over IPC can use the ipc_discover()

function with an IPC service name to fetch the application ID of the app hosting

the service. After this is done, the requesting app can register callbacks,

allow access to shared buffers, and finally notify the IPC service to perform

some operation.

Submodule Overview

We will be developing two userspace applications. The first provides a user interface with the screen and buttons. The second is the encryption service application. An overview of the structure is here:

┌──────────────────┐ ┌────────────────────┐

│ │ │ │

│ Screen App │ IPC │ Encryption Service │

│ │◄─────►│ App │

│ UI + Logging │ │ │

│ │ │ │

└───┬───────┬──────┘ └──┬───────────┬─────┘

Userspace │ │ │ │

─────────────────┼───────┼─────────────────┼───────────┼──────

Tock ┌──▼───┐┌──▼────┐ ┌─────▼────────┐┌─▼─────┐

Kernel │Screen││Buttons│ │AES Encryption││Console│

└──────┘└───────┘ │Oracle │└───────┘

└──────────────┘

The two applications will communicate using IPC. We will focus on creating the encryption service app, and use the screen app to help interact with the user.

Each application uses capsules provided by the kernel. The capsules have already been created and are already included with the kernel we installed. Consistent with our goal for an HWRoT encryption service, the AES Encryption Oracle has a built-in AES key that we can use to encrypt messages without our userspace application ever making contact with the key itself.

Milestones

We have three small milestones in this section, all of which build upon supplied starter code.

- Milestone one adds support for interfacing with a dispatch/logging service, to illustrate how various root of trust services might be dispatched in practice while maintaining separation.

- Milestone two adds support for sending/receiving data and serializing plaintext, introducing how libtock-c APIs work.

- Milestone three adds actual encryption support using the encryption oracle driver, demonstrating how the Tock syscall interface.

Setup

Before starting, check the following:

-

Make sure you have compiled and installed the Tock kernel with the screen and encryption oracle drivers on to your board.

cd tock/boards/tutorials/nrf52840dk-root-of-trust-tutorial make install -

Make sure you have no testing apps installed. To remove all apps:

tockloader erase-apps

Starter Code

We'll start with the starter code, which includes a logging application for displaying encryption service logs to the OLED screen, as well as a scaffold for developing the remainder of the encryption service userspace app.

-

Inside your copy of

libtock-c, navigate tolibtock-c/examples/tutorials/root_of_trust/.This contains the starter code which you'll work from in the following steps. For now, all this application does is present a list of services that the root of trust can provide, and allows you to select one to interact with.

-

Compile the screen application and load it onto your board. In the

screen/subdirectory, runmake install -

Next, navigate to the

encrypt_service/subdirectory in the same parent folder and load it as well by again runningmake install -

After both applications are loaded, you should see a screen which should allow you to select a service to dispatch. You can navigate up and down in the menu by using

BUTTON 1andBUTTON 3on the nRF52840dk board, and you can select an option by pressingBUTTON 4and then clickingStart.Note that right now, the encryption service doesn't have any code to react to requests for dispatch, so if you select it nothing will happen.

The source code for the screen application is in screen/main.c. If you dig

through it, you'll find logic for

- displaying the menu to select an application,

- requesting a root of trust service to be dispatched on select

- listening for logging requests to display

The macros for generating a menu using the u8g2 user interface library are a

bit obtuse at first, so they (along with the rest of the file) have been

commented thoroughly.

Milestone One: Connecting to the Main Screen App

To begin, we first want our encryption service to be able to (a) respond when the main screen app signals it to take over control of the UART console, and (b) connect to the logging service the main screen app provides for displaying logs to the screen.

From a functionality standpoint, we certainly could have service dispatch, all of the desired cryptographic services, and logging functionality in one event loop in an application; however, when using Tock, separating these functionalities into different apps is helpful from a security perspective. We'll discuss this more in the next part of the tutorial.

First, let's modify our scaffold in encryption_service_starter/main.c to

respond to the main screen app's dispatch signal using Tock's inter-process

communication (IPC) driver. Rename the directory in your local copy from

encryption_service_starter/ to encryption_service/. Completed code is

available in encryption_service_milestone_one/ if you run into issues.

-

Take a look in

screen/main.cat theselect_rot_service()function. This function, called bymain(), takes in the name of an IPC service hosted e.g. by our encryption service app and- calls

ipc_discover()to go from the IPC service name to the ID of the process hosting that IPC service - calls

ipc_register_service_callback()to register a logging IPC service underorg.tockos.tutorials.root_of_trust.screen, so that the selected root of trust service can log to screen - calls

ipc_notify_service()to trigger the IPC service of the process whose IDipc_process()found

All of these IPC API functions are provided by the

libtock/kernel/ipc.hheader included at the top of the file. - calls

-

To start, open

encryption_service/main.cand create await_for_start()function which registers an IPC callback under the service nameorg.tockos.tutorials.root_of_trust.encryption_serviceand then yields until that callback is triggered by the main screen app.- You'll want to use the IPC function

ipc_register_service_callback()to register your callback function. See the documentation there for how the signature of your callback function should look. - The callback function you write should set a global

boolfrom false to true.wait_for_start()can then use theyield_forfunction to wait for this change in state.

- You'll want to use the IPC function

-

Now, call

wait_for_start()inmain(), and follow it with a call toprintf()to send a message to the UART console; this should indicate when your app has been selected. -

To test this out, build and install your application as previous, then run

tockloader listenin a separate terminal. When you select the encryption service and hitStartin the menu, you should see your message in the console (not on the screen).TIP: You can leave the console running, even when compiling and uploading new applications. It's worth opening a second terminal and leaving

tockloader listenalways running.

Next, we need to connect our application back to the screen logging IPC service. To do this,

-

Again in

encryption_service/main.c, create a newsetup_logging()function which takes in a message string and sends it via IPC to the logging service to display.-

In the

log_to_screen()function, you'll want to useipc_discover()to discover the process ID for the logging service,ipc_register_client_callback()to provide a callback that sets a global flag to indicate a completed log, andipc_share()to share a buffer to the logging service. -

When creating the log buffer to share over IPC, which can store as long of a message as will fit on the OLED screen. 32 bytes should be sufficient. Make sure that the buffer is marked with the

alignedattribute. i.e.char log_buffer[LOG_WIDTH] __attribute__((aligned(LOG_WIDTH)));

-

-

Next, create a new

log_to_screen()function which takes in a null-terminated message string and sends it via IPC to the logging service to display.- To trigger the logging service to fetch your message string from the shared

buffer, you'll want to use

ipc_notify().

- To trigger the logging service to fetch your message string from the shared

buffer, you'll want to use

-

To test your implementation, add calls to

wait_for_start()andsetup_logging()tomain(), and follow them with some calls tolog_to_screen().- To test your implementation, recompile and re-install your encryption app and then use the on-device menu to start the encryption service.

Checkpoint: Your application should now be able to receive encryption requests over the UART console, and log these requests over IPC to the screen.

Milestone Two: Sending/Receiving Data and Serializing Plaintext

Now that we can interact with the main screen app over IPC, we should set up the UART console to allow inputting secrets to encrypt, and make sure that we can encode the resulting ciphertext as hex to present back to the user.

In a practical HWRoT setting, it may be inadvisable to send secret values to a

device in the clear where they could be intercepted. For instance, smart cards

and smaller secure elements often make use of GlobalPlatform's Secure Channel

Protocols such as

Secure Channel Protocol 03

to establish an encrypted, authenticated channel before exchanging any secret

information.

For brevity, we won't implement a full secure channel in this protocol, but at the end of this section we include a challenge in this vein for after the tutorial is complete.

To start, let's retrieve the secret from the user over UART to parse. Completed

code is available in encryption_service_milestone_two/ if you run into issues.

-

In

encryption_service/main.c, create arequest_plaintext()function which prompts a user over UART for plaintext into a provided buffer with a provided size.-

To prompt the user, you'll want to use

libtocksync_console_write()with a message like"Enter a secret to encrypt:". -

For fetching a response, you'll want to use

libtocksync_console_read()to read bytes one-by-one, breaking when you hit a newline (\nor\r). You'll also want to use this function to strip leading whitespace from the user's input. -

Make sure to echo each character as it's received by writing it back, or else the user won't be able to see their input.

-

For convenience later, return the size of the input.

-

-

Next, we'll add a function

bytes_to_hex()which inputs a byte buffer and length, and outputs a null-terminated hex string.- When writing this function, the most direct way to convert a byte to hex is

with

sprintf: you can use"%02X"as a format string.

- When writing this function, the most direct way to convert a byte to hex is

with

-

To test both of these functions in concert, modify

mainto, in a loop, input plaintext over the console, convert it to hex, and then report it to the screen.

Checkpoint: Your application should now be able to input messages via the UART interface and report byte values as hex.

Milestone Three: Adding Encryption Support

Finally, we want to actually encrypt our messages before we report them.

NOTE: If you've completed the HOTP tutorial prior, the same implementation of

oracle.cthere will work--feel free to simply copy it over fromencryption_service_milestone_three/if you've already implemented it before.

We first create a new file to house our interface to the encryption oracle

driver, then integrate it into main:

-

Create a header file

oracle.hinencryption_service/with the following prototype (don't forget to#include <stdint.h>!):int oracle_encrypt(const uint8_t* plaintext, int plaintext_len, uint8_t* output, int output_len, uint8_t iv[16]); -

Create a source file

oracle.cnext tooracle.hwith an implementation of this function, using the encryption oracle to encryptplaintextand placing the result inoutput_len. Theivbuffer should be used to return the randomized initialization vector generated for encryption.-

To randomize the IV, you'll want to use

libtocksync_rng_get_random_bytes(). -

The current kernel configuration has the ID for the encryption capsule as

0x99999, which you'll pass to each command that targets it. -

From there, the driver requires three allows to operate; you'll want to use

allow_readonly()andallow_readwrite()to set them up.- A read-only allow with ID 0 for sending the input plaintext

- A read-only allow with ID 1 for sending the input IV

- A read-write allow with ID 0 for receiving the output ciphertext

-

Next, you'll need to set up an upcall to confirm when the encryption is done. You'll want the signature of your upcall to look like

static void crypt_upcall(__attribute__((unused)) int num, int len, __attribute__ ((unused)) int arg2, __attribute__ ((unused)) void* ud);and it should both set a global flag indicating that the upcall is done, as well as store the ciphertext length passed to it in a global variable.

-

Finally, you'll need to send a command to the driver with command ID 1 to trigger the start, after which you should

yield_for()until the upcall completes and reset thedoneflag for the next call. The return value of the function should be the length returned from the upcall.

-

-

Finally, let's wire it all together. Go back to

main()inmain.c, and make it do the following:- Wait for the start signal for the encryption service (

wait_for_start()) - Set up the IPC logging interface (

setup_logging()) - Looping forever (using

log_to_screento indicate each step is happening):- Request a plaintext from the user (

request_plaintext()), from a plaintext buffer and to ciphertext buffer both of size512(four AES-128 blocks) - Encrypt the plaintext (

oracle_encrypt(), fromoracle.c) - Convert the ciphertext to a hex string (

bytes_to_hex()) - Dump the ciphertext to the console (e.g. with

printf("Ciphertext: %s\n", ...))

- Request a plaintext from the user (

- Wait for the start signal for the encryption service (

When you run the test now, you should be able to use tockloader listen and

type messages into the UART console when prompted to encrypt them.

Checkpoint: Your application should now be able to encrypt arbitrary messages sent over the UART console, logging the status of the encryption capsule to the screen as it runs.

Submodule Complete

Congratulations! Feel free to move on to the next section, where we'll begin to attack our implementation and show how Tock allows for defense-in-depth measures appropriate for a root of trust operating system.

If you have additional time or are looking to deepen your knowledge of Tock, continue to challenge section below.

Challenge: Authenticating the Results

NOTE: This challenge is open-ended, may take a while, and requires experience working on Tock drivers--it's best approached after completing the remainder of the tutorial. We'll touch on Tock drivers later in this tutorial, but you can also follow the HOTP tutorial for additional practice if you'd like.

As mentioned earlier, communication channels with a HWRoT are often encrypted and authenticated. The former provides confidentiality so that secrets can't be extracted by eavesdroppers; meanwhile, the latter provides authenticity of results so attackers can't impersonate either party.

While designing a secure channel is a surprisingly tricky task, many existing frameworks exist, e.g. the popular Noise Protocol Framework used by many projects including the well-known WireGuard VPN. As a step in this direction, the challenge described here is to just provide authentication using ECDSA signatures for the ciphertexts that the root of trust produces, so that a client of the encryption service can be sure that the results they receive came from our root of trust.

Here is an outline for how one might go about doing so--these steps are intentionally a bit vague, as this is intended more to serve as a longer-term practice than something that can be done in the timeframe of an in-person tutorial:

-

You'll want to first add a signing oracle driver. While the nRF52840dk board used for this tutorial lacks ECDSA hardware support[^1], Tock provides an

ecdsa-swdriver which wraps RustCrypto's signing and verifying implementations to provide software support.-

The actual structure you will want to use is the

EcdsaP256SignatureSignerincapsules/ecdsa_sw/src/p256_signer.rs. This struct implements thepublic_key_crypto::SignatureSignhardware interface layer (HIL) trait, so you can use itssign()method to sign messages and itsset_sign_client()to designate a callback for when a signing operation is completed. Thepublic_key_crypto::SetKeyHIL will similarly allow you to change the key the signer uses. -

You can base your work off the encryption oracle implementation in

capusles/extra/tutorials/encryption_oracle_chkpt5.rs. Most of the logic for tracking driver state should remain the same, but instead of the driver struct containing an instance of an AES struct used encrypt, your driver struct will contain anEcdsaP256SignatureSignerused to sign.

-

-

Next, you'll want to create a board definition based off the one in

boards/nordic/nrf52840dk/src/main.rswhich instantiates aEcdsaP256SignatureSignerand your signing oracle driver, passing the former to the latter on creation.- For an example this struct in use, see the ECDSA test capsule in

capsules/ecdsa_sw/src/test/p256.rsas well as the test board configuration inboards/configurations/nrf52840dk/nrf52840dk-test-kernel/src/test/ecdsa_p256_test.rswhich depends on it.

- For an example this struct in use, see the ECDSA test capsule in

-

Finally, you'll want to create a new userspace interface to this driver akin to that in

encryption_service/oracle.c. The resulting file should be almost identical, but of course with functions accepting messages to sign instead of secrets to encrypt, etc.

Even if you don't complete all these steps, hopefully reviewing the above outline should give a good picture of how you can go from an idea of a driver you need for an application to a full implementation and integration into a userspace app.

[^1] This is almost true: the nRF52840 chip contains the closed-source ARM TrustZone CryptoCell 310, which has support for ECDSA signatures, but sadly there's not driver support for it yet (due to its closed-source nature).

Userspace Attacks on the Encryption Service

Now that the userspace application has been completed, we can attempt to perform some attacks on it and demonstrate how Tock stops them.

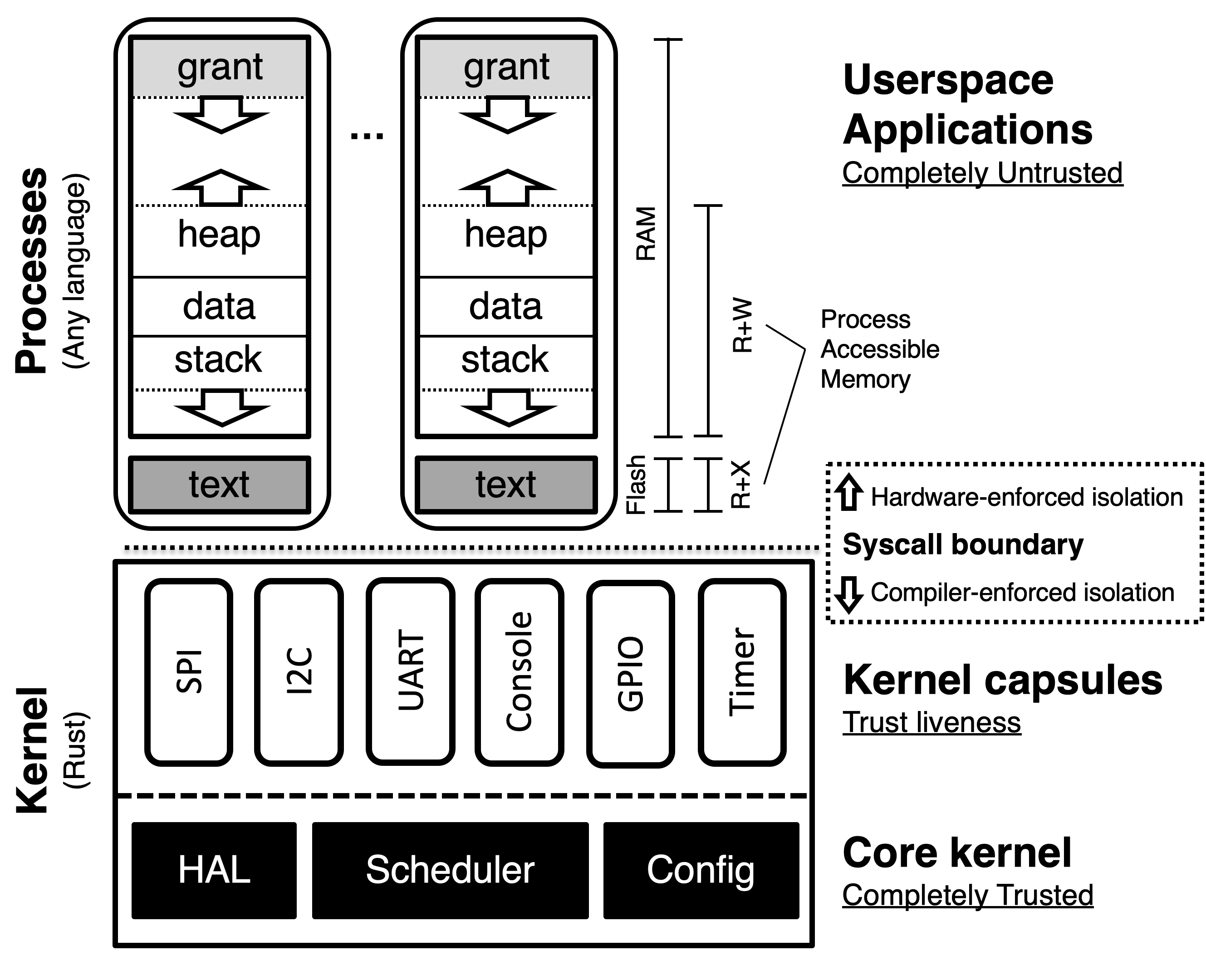

The primary theme we'll see is that Tock takes a two-fold approach to security though isolation; for everything above the syscall layer, isolation between apps is guaranteed at runtime using hardware protection, whereas below the syscall layer, isolation between components is guaranteed at compile-time via careful use of Rust's type system. This allows for the flexibility of dynamically loading applications while simultaneously providing the highest degree of security for the kernel: if isolation is violated, the kernel simply won't build.

In this submodule, we'll explore the runtime isolation between applications, and in the next we'll dive into the kernel to see an example of compile-time isolation in the kernel.

Background

Memory Protection in Embedded Systems

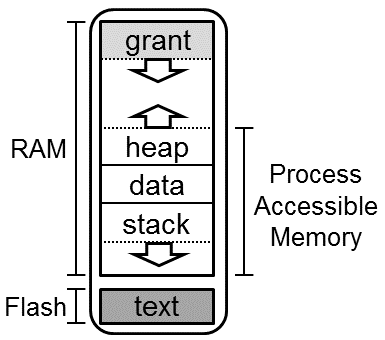

In non-embedded contexts, a CPU will usually have a memory management unit (MMU) which handles all memory accesses to memory pages. Embedded contexts usually don't provide enough memory to justify paging, so a much simpler piece of hardware--the memory protection unit (MPU)--is used instead.

The MPU's sole job is to maintain a series of contiguous memory regions which

the processor should currently be allowed to access to provide hardware-level

runtime isolation guarantees. For instance, when an application is running, the

MPU typically allows the processor read-execute access to the .text section of

the application and read-write access to the corresponding section in SRAM. Each

context switch in Tock is paired with a reconfiguration of the MPU to ensure

that out-of-bounds accesses don't allow applications to access memory belonging

to the kernel or other applications.

Submodule Overview

We have two small milestones in this section, one of which builds upon supplied starter code for an application, and the other of which builds upon the board definition we've been using for our kernel this whole time.

- Milestone one adds an application which attempts to dump its own memory, followed by the memory of the encryption oracle application--we use this as an example of a potential userspace attack on a root of trust.

- Milestone two customizes Tock's response to this attack at the board definition level.

Starter Code

The starter code for the userspace attack application is in the

suspicious_service_starter/ subdirectory.

To launch this 'suspicious' service which we'll use to dump userspace memory,

simply navigate as per the previous submodule to the Suspicious service in the

on-device menu, select it, and then select Start as usual.

Milestone One: Attempting to Dump Memory from SRAM

To start, we first need to set up our attack application to attempt to dump

memory from SRAM. If you get stuck, an implementation of this milestone is

available at suspicious_service_milestone_one/.

-

In

libtock-c/examples/tutorials/root_of_trust/, rename the directorysuspicious_service_starter/to justsuspicious_service/. -

Inside

suspicious_service/main.c, we'll want to start by adding everything we needed from the previous step for interacting with the main screen application. Copy overwait_for_start(),setup_logging(), andlog_to_screen()along with the IPC callbacks and global variables they rely upon from the previous submodule tosuspicious_serivce/main.c. -

Now we will dump the contents of the suspicious service's memory. In

suspicious_service/main.c, add a functiondump_memory()that takes in auint32_t *startword pointer, auint32_t *endword pointer, and a label string, and loops over each address from start to end while printing out over UART e.g.[<LABEL>] <address>: <value>to show the value at each memory address. -

In main, call

dump_memory()to dump the first 16KiB (0x1000words of memory) of thesuspiciousSRAM dumping service you're modifying right now.To get the address that our SRAM dumping application's memory starts at, Tock supplies a

Memopclass of syscalls we can use, which are nicely wrapped in utility functions such astock_app_memory_begins_at()in libtock-c.If desired, use

log_to_screen()to log when the memory dump starts/stops. You should (when selecting theSuspicious servicein the on-device menu) successfully be able to retrieve the bytes of code of the running SRAM dumping service.

Checkpoint: Your suspicious service application should be able to dump its own memory contents.

Now that we've established an app can dump its own memory, can it also dump the memory from a different application? Intuitively, processes shouldn't be able to read memory from other apps. However, embedded systems often do not provide the same isolation that we expect on servers, desktops, and phones. Let's try it to see what happens.

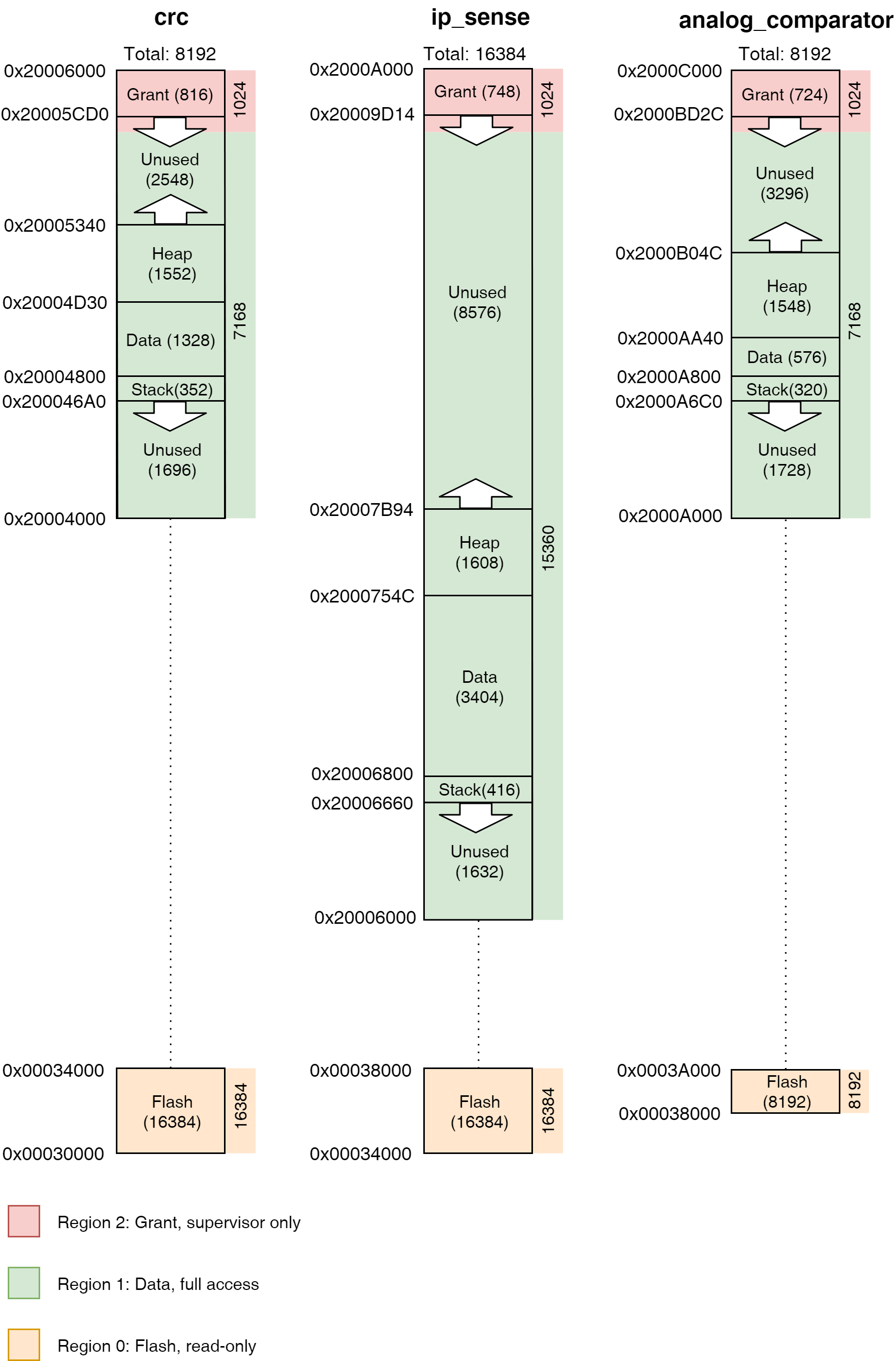

-

We first want to obtain the addresses in memory the kernel allocated for the encryption service we installed previously. To do this, we'll rebuild the kernel and add an additional feature. Under our board definition in

tock/boards/tutorials nrf52840dk-root-of-trust-tutorial/, you'll want to make the following change so that the process load debugging is enabled for the kernel:--- a/boards/tutorials/nrf52840dk-root-of-trust-tutorial/Cargo.toml +++ b/boards/tutorials/nrf52840dk-root-of-trust-tutorial/Cargo.toml @@ -10,7 +10,7 @@ build = "../../build.rs" edition.workspace = true [features] -default = ["screen_ssd1306"] +default = ["screen_ssd1306", "kernel/debug_load_processes"] screen_ssd1306 = [] screen_sh1106 = [] -

After making this change, run (in the same directory)

make installso that the new kernel is installed. From there, press theRESETbutton on the board, and look at yourtockloader listenconsole; it should show something like[INFO ] Using "/dev/ttyACM0 - J-Link - CDC". [INFO ] Listening for serial output. Initialization complete. Entering main loop NRF52 HW INFO: Variant: AAF0, Part: N52840, Package: QI, Ram: K256, Flash: K1024 ... Loading: org.tockos.tutorials.attestation.encryption [1] flash=0x00048000-0x0004C000 ram=0x2000A000-0x2000BFFF ... No more processes to load: Could not find TBF header.These debug statements are from Tock's application loader. Reviewing the line starting with

Loading:, we can clearly see that the SRAM range for the encryption service is0x2000A000-0x2000BFFF. -

After that, try adding another

dump_memory()call to dump the first0x1000words of the encryption application. Because applications should never need to access each other's memory, you'll need to get this address from the debug output you collected in step 3. Once you've done this, your code should compile fine, but when you check thetockloader listenUART console, you should see a fault dump.

With any luck, the fault dump you receive should look something like this:

...

---| Cortex-M Fault Status |---

Data Access Violation: true

Forced Hard Fault: true

Faulting Memory Address: 0x2000A000

...

𝐀𝐩𝐩: org.tockos.tutorials.attestation.suspicious - [Faulted]

Events Queued: 0 Syscall Count: 4118 Dropped Upcall Count: 0

Restart Count: 0

Last Syscall: Yield { which: 1, param_a: 0, param_b: 0 }

Completion Code: None

╔═══════════╤══════════════════════════════════════════╗

║ Address │ Region Name Used | Allocated (bytes) ║

╚0x2000E000═╪══════════════════════════════════════════╝

│ Grant Ptrs 120

│ Upcalls 320

│ Process 768

0x2000DB48 ┼───────────────────────────────────────────

│ ▼ Grant 216

0x2000DA70 ┼───────────────────────────────────────────

│ Unused

0x2000CF3C ┼───────────────────────────────────────────

│ ▲ Heap 1468 | 4336 S

0x2000C980 ┼─────────────────────────────────────────── R

│ Data 384 | 384 A

0x2000C800 ┼─────────────────────────────────────────── M

│ ▼ Stack 416 | 2048

0x2000C660 ┼───────────────────────────────────────────

│ Unused

0x2000C000 ┴───────────────────────────────────────────

.....

0x00050000 ┬─────────────────────────────────────────── F

│ App Flash 16272 L

0x0004C070 ┼─────────────────────────────────────────── A

│ Protected 112 S

0x0004C000 ┴─────────────────────────────────────────── H

...

Indeed, when we tried to access the first address from the encryption service's SRAM, the nRF52840's MPU triggered a hard fault, which passed control to Tock's hard fault handler and allowed Tock to halt the application.

If we look even further down the debug dump, we can actually even see the MPU configuration Tock set up at the time of the fault:

Cortex-M MPU

Region 0: [0x2000C000:0x2000D000], length: 4096 bytes; ReadWrite (0x3)

Sub-region 0: [0x2000C000:0x2000C200], Enabled

Sub-region 1: [0x2000C200:0x2000C400], Enabled

Sub-region 2: [0x2000C400:0x2000C600], Enabled

Sub-region 3: [0x2000C600:0x2000C800], Enabled

Sub-region 4: [0x2000C800:0x2000CA00], Enabled

Sub-region 5: [0x2000CA00:0x2000CC00], Enabled

Sub-region 6: [0x2000CC00:0x2000CE00], Enabled

Sub-region 7: [0x2000CE00:0x2000D000], Enabled

Region 1: Unused

Region 2: [0x0004C000:0x00050000], length: 16384 bytes; UnprivilegedReadOnly (0x2)

Sub-region 0: [0x0004C000:0x0004C800], Enabled

Sub-region 1: [0x0004C800:0x0004D000], Enabled

Sub-region 2: [0x0004D000:0x0004D800], Enabled

Sub-region 3: [0x0004D800:0x0004E000], Enabled

Sub-region 4: [0x0004E000:0x0004E800], Enabled

Sub-region 5: [0x0004E800:0x0004F000], Enabled

Sub-region 6: [0x0004F000:0x0004F800], Enabled

Sub-region 7: [0x0004F800:0x00050000], Enabled

Region 3: Unused

Region 4: Unused

Region 5: Unused

Region 6: Unused

Region 7: Unused

This indicates that our application had read-write access to the range

0x2000C000 - 0x2000D000 (the SRAM dump application's allotted SRAM) and

read-only access to the range 0x0004F800 - 0x00050000 (the SRAM dump

application's code) but that an attempted access to any other region of memory

would result in a hard fault, as we just saw. As such, even when the kernel is

configured to accept arbitrary applications, runtime application isolation can

be enforced.

Checkpoint: Your suspicious service application was blocked from reading the memory of a different application!

Milestone Two: Modifying our Kernel's Fault Policy

When a system faults, it can be very useful to dump as much information as possible to the console to aid in debugging. In some secure production contexts though, it might be useful to keep dumped information on system state to a minimum. As an example of this in Tock, we will modify the kernel's application fault policy to provide less information while still alerting the user that an application has faulted, keeping the more potentially sensitive details like MPU configurations for e.g. protected logs.

To start, we'll need to open up our board definition in Tock:

-

Open

tock/boards/tutorials/nrf52840dk-root-of-trust-tutorial/main.rs, and note where it says#![allow(unused)] fn main() { const FAULT_RESPONSE: capsules_system::process_policies::PanicFaultPolicy = capsules_system::process_policies::PanicFaultPolicy {}; }This is the part of the board configuration file that indicates how the Tock kernel should respond to an application faulting. The

PanicFaultPolicysimply causes the whole system to panic upon an application faulting; while this is helpful for debugging, it does allow malicious applications to deny service in production and can reveal information regarding MPU configuration that might be best kept to device logs. -

To implement our own fault policy, we'll add a new Rust

structwhich represents our policy: above the line displayed above, add a line#![allow(unused)] fn main() { struct LogThenStopFaultPolicy {} } -

Next, we'll want to define how our policy works when a fault happens. To do this, we'll add an implementation for a Rust trait

ProcessFaultPolicywhich requires us to add anaction()method that the kernel can call whan a running app faults.#![allow(unused)] fn main() { impl kernel::process::ProcessFaultPolicy for LogThenStopFaultPolicy { fn action(&self, process: &dyn process::Process) -> process::FaultAction { kernel::debug!( "CRITICAL: process {} encountered a fault!", process.get_process_name() ); match process.get_credential() { Some(c) => kernel::debug!("Credentials checked for app: {:?}", c.credential), None => kernel::debug!("WARNING: no credentials verified for faulted app!"), } kernel::debug!("Process has been stopped. Review logs."); process::FaultAction::Stop } } }This now just indicates which process faulted, indicates the credentials (i.e. a code signing signature) that the application was loaded with, and then instructs the user to check the HWRoT logs.

-

Finally, to register this as the fault response we want, replace the previous line starting with

const FAULT_RESPONSE: ...with one that definesFAULT_RESPONSEas an instance of our newLogThenStopFaultPolicy:#![allow(unused)] fn main() { const FAULT_RESPONSE: LogThenStopFaultPolicy = LogThenStopFaultPolicy {}; } -

Finally, run

make installin ourtock/boards/tutorials/nrf52840dk-root-of-trust-tutorial/directory.

Now, when you run the suspicious SRAM dump service, you should see something like the following:

CRITICAL: process org.tockos.tutorials.attestation.suspicious encountered a fault!

WARNING: no credentials verified for faulted app!

Process has been stopped. Review logs.

Out of the box, Tock was able to provide runtime application isolation between our encryption service and a malicious SRAM dumping application, and in just a few lines of code, we were able to customize our kernel's response to application faults to match our production HWRoT use case.

In the next submodule, we'll explore one aspect of Tock's compile-time kernel-level isolation guarantees: capabilities.

Kernel Attacks on the Encryption Service

In this last submodule of the HWRoT course, we'll explore how Tock's kernel-level isolation mechanisms help protect sensitive operations in a HWRoT context.

Our previous attempt at an attack on the HWRoT encryption service—an SRAM dumping attack—assumed that we were able to load a malicious application. As we saw, Tock's process-level isolation guarantees prevented the malicious application from being able to compromise other processes.

But what if the attacker tries to compromise the kernel itself? To give our attacker even more of an advantage this time, let's assume that a hypothetical attacker of our HWRoT might try slip some questionable logic into a kernel driver, and see how Tock provides defense-in-depth via language-based isolation at the driver level.

NOTE: For a full description of Tock's threat model and what forms of isolation it's intended to provide, see the Tock Threat Model page elsewhere in the Tock Book.

Background

Rust Traits and Generics

The Rust programming language (which the Tock kernel is written in) allows for defining methods on structs and enums, similar to class methods in many languages.

Following this analogy, Rust traits are like interfaces in other languages:

they let you specify shared behavior between types. For instance, the Clone

trait in Rust roughly looks like

#![allow(unused)] fn main() { pub trait Clone { fn clone(&self) -> Self; } }

which indicates that any type that implements the Clone trait needs to provide

an implementation of clone returning something of its own type (Self) given

a reference to itself (&self). Implementations are provided in impl blocks:

for instance, to implement the above trait, you might write something like

#![allow(unused)] fn main() { struct MyStruct { ... } impl Clone for MyStruct { fn clone(&self) -> Self { ... } } }

Types can be bound by traits: for instance, a function signature like

#![allow(unused)] fn main() { fn duplicate<C: Clone>(value: C) { ... } }

indicates that duplicate() is defined to be generic over any type C such

that C implements the Clone trait, and that the input to duplicate() will

be of this type C.

As a last note, traits can be marked as unsafe to denote that any

implementation of such a trait may need to rely on invariants that the Rust

compiler can't verify. One common example is the Sync trait, which types can

implement to indicate that they're safe to share between threads.

Because such traits can't be compiler-verified, the Rust compiler requires

implementations of them to be marked as unsafe as well, e.g.

#![allow(unused)] fn main() { struct MyStruct { ... } unsafe impl Send for MyStruct {} }

Submodule Overview

Our goal in this submodule is to modify an existing kernel capsule to "slip in" a function call that a (malicious) userspace app can trigger that compromises the overall system integrity. To make this a subtle attack, the attacker wants to hide this new function call in the kernel so that when the board maintainer updates to a new version of Tock the attack is present in the kernel.

For demonstration, we will insert a call to hardfault_all_apps(). This is of

course a sensitive API designed exclusively for testing. This API should not be

accessible to userspace, but we will see if an attacker can expose this to

userspace without the board maintainer knowing about the change.

Milestones

We additionally have two small milestones in this section: one to sneak some logic into our encryption oracle driver, and then one to add an application which uses it.

- Milestone one adds a minimal bit of logic to the encryption oracle driver which a userspace application can use to fault all running applications, but with the caveat that it requires the board definition to explicitly give it that permission.

- Milestone two adds a userspace application to trigger this driver, and then demonstrates how Tock performs language-level access control to capabilities which the Tock board definition has to explicitly grant.

Starter Code

Again as in the previous section, we have some starter code in libtock-c. The

only new directory we'll use is the questionable_service/ subdirectory in

libtock-c/examples/tutorials/root_of_trust/.

To launch this 'questionable' service which we'll use to trigger the

fault all processes driver, simply navigate as per the previous submodules to

the Questionable service in the on-device menu, select it, and then select

Start as usual.

Milestone One: Adding the Fault All Processes Driver

As a first step, we'll need to add some logic to our encryption oracle capsule.

Open tock/capsules/extra/src/tutorials/encryption_oracle_chkpt5.rs (the

completed encryption oracle driver) and do the following:

-

First, we need to ensure our compromised driver has a reference to the kernel, as well as a capability of generic type

C. This capability will be necessary in a second, but for now we take it for granted. Down where theEncryptionOracleDriverstruct is, add a new type parameterC: ProcessManagerCapability, and then akernelandcapabilitymember:#![allow(unused)] fn main() { pub struct EncryptionOracleDriver<'a, A: AES128<'a> + AES128Ctr, C: ProcessManagementCapability> { kernel: &'static kernel, capability: C, aes: &'a A, process_grants: Grant< ProcessState, ... >, ... } }Don't forget to add an import for

kernel::capabilities::ProcessManagementCapabilityandkernel::Kernelas well at the top of the file:#![allow(unused)] fn main() { use core::cell::Cell; use kernel::capabilities::ProcessManagementCapability; use kernel::grant::{AllowRoCount, AllowRwCount, Grant, UpcallCount}; ... use kernel::{ErrorCode, Kernel}; ... } -

Next, now that we've added a new type parameter to

EncryptionOracleDriver, we'll need to change the implementations of eachimplblock so that enough type parameters are provided to it. In theimplblock just below our newly-modified struct definition, we'll change#![allow(unused)] fn main() { impl<'a, A: AES128<'a> + AES128Ctr> EncryptionOracleDriver<'a, A> { ... } }to

#![allow(unused)] fn main() { impl<'a, A: AES128<'a> + AES128Ctr, C: ProcessManagementCapability> EncryptionOracleDriver<'a, A, C> { ... } }Later in the file, you'll also want to change

#![allow(unused)] fn main() { impl<'a, A: AES128<'a> + AES128Ctr> SyscallDriver for EncryptionOracleDriver<'a, A> { ... } }to

#![allow(unused)] fn main() { impl<'a, A: AES128<'a> + AES128Ctr, C: ProcessManagementCapability> SyscallDriver for EncryptionOracleDriver<'a, A, C> { ... } }and

#![allow(unused)] fn main() { impl<'a, A: AES128<'a> + AES128Ctr> Client<'a> for EncryptionOracleDriver<'a, A> { ... } }to